Awakened Intelligence #005

New models, new libraries and mobile friendly approach - A PoorGPUguy weekly dose of cutting-edge open-source AI news & insights

Welcome back to our weekly dose of Open Source AI.

In the past days many new models and advancements have been done: some new amazing proprietary models and few awesome Open Source ones.

I want to start sharing with you a secret… yes from the start!

Sometime old does not mean worse!

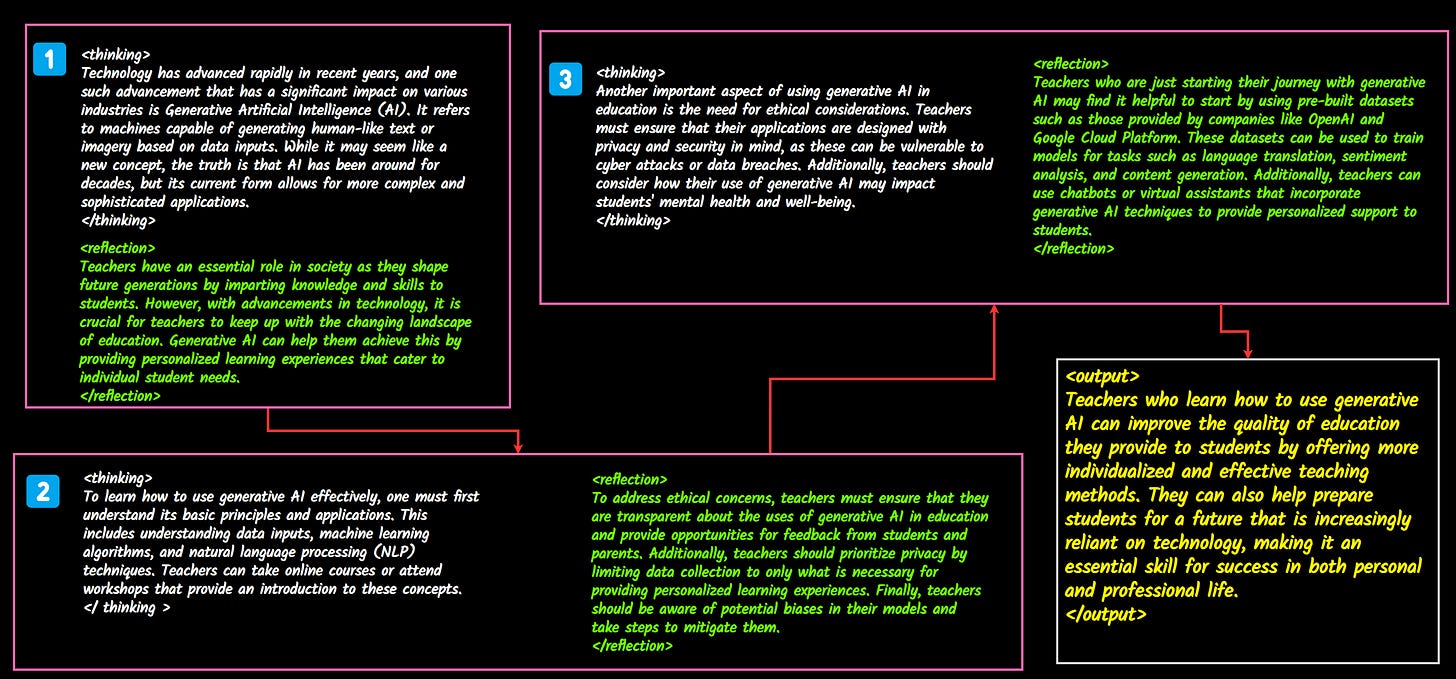

I will discuss today with you about new models and approaches, but I recently tried the Reflection prompt with the good old orca-mini-3B… This model is from June 2023, and yet it works like a charm, better than models trained with Reflection dataset today. Look at this:

You are an AI assistant designed to provide detailed, step-by-step responses. Your outputs should follow this structure:

1. Begin with a <thinking> section.

2. Inside the thinking section:

a. Briefly analyze the question and outline your approach.

b. Present a clear plan of steps to solve the problem.

c. Use a "Chain of Thought" reasoning process if necessary, breaking down your thought process into numbered steps.

3. Include a <reflection> section for each idea where you:

a. Review your reasoning.

b. Check for potential errors or oversights.

c. Confirm or adjust your conclusion if necessary.

4. Be sure to close all reflection sections.

5. Close the thinking section with </thinking>.

6. Provide your final answer in an <output> section.

Always use these tags in your responses. Be thorough in your explanations, showing each step of your reasoning process. Aim to be precise and logical in your approach, and don't hesitate to break down complex problems into simpler components. Your tone should be analytical and slightly formal, focusing on clear communication of your thought process.

Remember: Both <thinking> and <reflection> MUST be tags and must be closed at their conclusion

Make sure all <tags> are on separate lines with no other text. Do not include other text on a line containing a tag.

user question: explain why it is crucial for teachers to learn how to use generative AI for their job and for the future of education. Include relevant learning path for teachers and educators.

And here the reply, of this 15 months old model 😱👏

If you are curious you can check my personal benchmarks for this model on my github.

Enough with the introduction. In this newsletter we are going deeper on the the new mobile friendly models (and how to run them on your phone), and some new libraries in the Generative AI world.

1.Small Language Models

> Facebook MobileLLM overview

> SmolLM2

How to use SmolLM2

2. OuteTTS-0.1-350M - Yet not another Text To Speech Model

> How to use the model

3.DOCLING - IBM intelligent document parsing

4.Gift of the weekA week of impressive small LMs

Facebook/Meta released MobileLLM (125M, 350M, 600M, 1B)

Hugging Face released SmolLM2 (135M, 360M, 1.7B)

AMD released AMD OLMo (1B)

I cannot tell you too much about the facebook models: we don’t have yet a real prompt template to work with. And the demo code in Hugging Face is not that clear.

But I can tell you few things from the well curated paper MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases!

In today's mobile-first world, the demand for efficient and powerful AI models is soaring. However, large language models (LLMs) often require significant computational resources, making them impractical for on-device deployment. The Facebook team research focuses on developing high-quality, compact LLMs specifically designed for mobile devices. They prioritized architecture design over sheer model size, and created a new state-of-the-art model called MobileLLM. These models, with fewer than a billion parameters, surpass previous models in accuracy while maintaining efficiency.

Our innovative weight-sharing technique further boosts performance without increasing model size. These advancements bring us closer to a future where advanced AI capabilities are accessible on everyday mobile devices.

On the other hand, there is a lot I can tell you about the new version 2 of the SmolLM model family: they are simply amazing.

Hugging Face TB Research is a team in the Hub dedicated to open-source project for the Community. The Team explores synthetic datasets, generated by Large Language Models (TB is for Textbook, as inspired by the “Textbooks are all your need” paper).

SmolLM2 is a family of compact language models available in three size: 135M, 360M, and 1.7B parameters. They are capable of solving a wide range of tasks while being lightweight enough to run on-device.

The 1.7B variant demonstrates significant advances over its predecessor SmolLM1–1.7B, particularly in instruction following, knowledge, reasoning, and mathematics.

The biggest advancement in revision 2 is that the context length is extended up to 8k (8192 tokens) and the overall accuracy is improved quite a lot! Don’t trust my words: in the GIFT of the week section I will share with you an article with a comparison on my benchmark between SmolLM-1.7b-instruct and SmolLM2-1.7b-instruct.

How to use them?

This model is quite straight forward and easy to use it with python. There are several ways, and here I will show you only one (the others in the GIFT of the week).

NOTE: in all methods I am using quantized versions, to make sure everyone, regardless of the hardware constraints, can run it.

The model can be downloaded for free directly from the official Hugging Face repository, in its q4 GGUF version. Download the file smollm2–1.7b-instruct-q4_k_m.gguf in a subfolder called model.

The only requirement is llama-cpp-python that you can install with pip.

pip install llama-cpp-python==0.3.1Once it is installed you can open the python REPL from the terminal

pythonThen we import llama-cpp-python, instantiate the model and call the chat_template method. Finally we print the output

from llama_cpp import Llama

llm = Llama(model_path='model/smollm2-1.7b-instruct-q4_k_m.gguf',n_ctx=8192)

stops = ['<|im_end|>']

prompt = [{"role": "user",

"content": "write 3 paragraphs about artificial intelligence."}]

res = llm.create_chat_completion(messages=prompt, temperature=0.1,

repeat_penalty=1.178, stop=stops, max_tokens=500)

print(res['choices'][0]['message']['content'])And what about Olmo AMD? I will give you hands up in next week… I want to test it carefully before saying something not grounded. I don’t trust official metrics! AllenAI is an amazing research Lab, and I know for certain that this is one of the few fully open-source project from the ground-up!

Yet not another Text To Speech Model

OuteTTS-0.1-350M is a novel text-to-speech synthesis model that leverages pure language modeling without external adapters or complex architectures.

It is built upon the LLaMa architecture using Oute3-350M-DEV base model, it demonstrates that high-quality speech synthesis is achievable through a straightforward approach using crafted prompts and audio tokens.

Key Features

Pure language modeling approach to TTS

Voice cloning capabilities

LLaMa architecture

Compatible with llama.cpp and GGUF format

To use it is so simple that it made me cry out of joy. Support to GGUF with a simple pip installation!

pip install llama-cpp-python

pip install outettsAnd to run it, with GGUF version is straight forward: obviously you need to download the GGUF from the official repo too. I saved it in the same project folder directory.

Note that it will also download a tokenizer checkpoint called

wavtokenizer_large_speech_320_24k.ckptlocally (around 1.7 Gb)!

from outetts.v0_1.interface import InterfaceHF, InterfaceGGUF

# Initialize the interface with a GGUF model

interface = InterfaceGGUF("OuteTTS-0.1-350M-Q8_0.gguf") #path/to/model.gguf

# Generate TTS output

# Without a speaker reference, the model generates speech with random speaker # characteristics

output = interface.generate(

text="Hello, am I working?",

temperature=0.1,

repetition_penalty=1.1,

max_length=4096

)

# Play the generated audio

output.play()

# Save the generated audio to a file

output.save("output.wav")You can even clone a voice!

from outetts.v0_1.interface import InterfaceHF, InterfaceGGUF

# Initialize the interface with a GGUF model

interface = InterfaceGGUF("OuteTTS-0.1-350M-Q8_0.gguf") #path/to/model.gguf

# Create a custom speaker from an audio file

speaker = interface.create_speaker(

"path/to/reference.wav",

"reference text matching the audio"

)

# Generate TTS with the custom voice

output = interface.generate(

text="This is a cloned voice speaking",

speaker=speaker,

temperature=0.1,

repetition_penalty=1.1,

max_lenght=4096

)

# Play the generated audio

output.play()

# Save the generated audio to a file

output.save("output.wav")So far PyMuPDF and the latest pymupdf4llm were the top notch libraries to parse documents and convert them into plain text or even structured Markdown. These formats are much better fit to work with Large Language Models pipelines.

But there is a new player in town, directly from IBM! Soon after the amazing Granite3 model family, here another huge contribution of this Company to the LLM stack.

Meet docling

Docling is a new library from @IBM that efficiently parses PDF, DOCX, and PPTX and exports them to Markdown and JSON. It supports advanced PDF understanding and seamless integration with @llama_index and @LangChainAI

Here some advanced features:

Advanced PDF processing: handles layout, reading order, and tables.

Unified document representation for easier processing.

integration with LlamaIndex and LangChain for RAG applications.

Includes OCR for scanned PDFs.

User-friendly CLI and Python API.

You can find more details and an easy quick-start guide on GitHub

As anticipated here my special article on Medium about SmolLM2-1.7b-instruct, with tips and tricks about how to use it and all my benchmark results (including prompt tweaks).

This is only the start!

Hope you will find all of this useful. Feel free to contact me on Medium.

I am using Substack only for the newsletter. Here every week I am giving free links to my paid articles on Medium.

Follow me and Read my latest articles https://medium.com/@fabio.matricardi