Awakened Intelligence #006

LLM on Android/iOS, instruction free datasets, people doing amazing things with LLMs - A PoorGPUguy weekly dose of cutting-edge open-source AI news & insights

Hello everyone, and welcome to our weekly dose of AI. In the past few days I have been busy trying to understand how to use speculative decoding, and whether it is really worth it: speculative decoding is a technique that uses a smaller model to speed up the inference of a bigger one. And for people like us, with only a CPU, this could be an amazing breakthrough!

Imagine how cool it would be to run a 7B-parameter model as fast as a 2B one!

But so far I haven’t figured out where the catch is: I know how to run them, but I get no speed improvement (it is supposed to be 2.5x faster…). If you have done it yourself with llama.cpp, leave me a message!
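To make the idea concrete, here is a minimal toy sketch of the draft-and-verify loop, in its simplest greedy form. Everything in it is an assumption for illustration: `target_next` and `draft_next` are hypothetical stand-ins for the big and the small model, and the verification is simulated token by token, whereas real implementations score all drafted tokens in one batched pass of the big model. It is not how llama.cpp implements it.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]


def target_next(context: List[int]) -> int:
    """Hypothetical 'big' model: always continues with (last token + 1) mod 10."""
    return (context[-1] + 1) % 10


def draft_next(context: List[int]) -> int:
    """Hypothetical 'small' model: agrees with the big one, except after a 7."""
    return 0 if context[-1] == 7 else (context[-1] + 1) % 10


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding. Returns (tokens, number of verification rounds)."""
    tokens = list(prompt)
    rounds = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. The cheap draft model proposes k tokens, one after the other.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))

        # 2. The big model checks the proposal. Here this is simulated with
        #    one call per position; the whole check counts as one round.
        rounds += 1
        accepted: List[int] = []
        for guess in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)  # the big model's token is always kept
            if expected != guess:
                break  # first disagreement: drop the rest of the draft
        else:
            # Every guess was right, so the same round yields one bonus token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens[:len(prompt) + max_new_tokens], rounds


def greedy_decode(target: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Plain decoding with the big model only: one call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target(tokens))
    return tokens


output, rounds = speculative_decode(target_next, draft_next, [0], max_new_tokens=12)
print(output == greedy_decode(target_next, [0], 12), rounds)
```

The output is identical to what the big model would produce alone; only the number of big-model rounds goes down. The gain depends on how often the draft guesses right and on how cheap the draft model is compared to the big one, so a draft that disagrees often, or that is not much faster, can cancel the benefit.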

But let’s focus on what we can do for now. Here is some of the coolest news of the week for AI enthusiasts like you.

PocketPal AI - LLM on your iPhone/Android

I cannot stress this enough: after Georgi Gerganov’s llama.cpp repository, PocketPal AI is the next real step in the democratization of Generative AI!

Before llama.cpp no one could have even dreamed of running an AI without a powerful GPU card. Now everyone has access to the entire Hugging Face catalog of Small/Large Language Models.

But what is PocketPal AI 📱🚀?

PocketPal AI is a pocket-sized AI assistant powered by small language models (SLMs) that run directly on your phone. Designed for both iOS and Android, PocketPal AI lets you interact with various SLMs without the need for an internet connection.

Created by the brilliant Asghar Ghorbani, this application is officially released for FREE on both the App Store and the Google Play Store.

With the latest update we now have 45,000 LLMs at our fingertips! PocketPal now lets you access and download LLM (GGUF) models from @huggingface directly, and if you are lucky some of them might work on your phone, and you can chat with them :)
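Whether a model "might work on your phone" is mostly a question of memory. A rough back-of-the-envelope check, as a sketch: the weights take about (number of parameters × bits per weight ÷ 8) bytes. The bits-per-weight value, the phone RAM and the `usable_fraction` safety margin below are my assumptions, and the estimate ignores the context cache and runtime overhead, so treat the result as a lower bound.

```python
def approx_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bits = params_billions * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


def probably_fits(params_billions: float, bits_per_weight: float,
                  phone_ram_gb: float, usable_fraction: float = 0.5) -> bool:
    """Guess whether the weights fit in the share of RAM one app can use.

    usable_fraction is an assumed safety margin, not a measured value:
    the OS and other apps need memory too.
    """
    size_gb = approx_model_size_gb(params_billions, bits_per_weight)
    return size_gb <= phone_ram_gb * usable_fraction


# Assumed example: a phone with 6 GB of RAM and a roughly 4.5-bit quantization.
for params in (1.7, 7.0):
    size = approx_model_size_gb(params, 4.5)
    verdict = "worth a try" if probably_fits(params, 4.5, phone_ram_gb=6) else "probably too big"
    print(f"{params}B parameters: about {size:.2f} GB of weights, {verdict}")
```

With these assumptions, the small models are the ones worth downloading first.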

Ollama Model manager

Remember one of the last newsletters about Ollama?

Ollama is an easy plug-and-play desktop tool to download and run quantized AI models locally on your PC. It is updated constantly to support new models and architectures. Now there is a CLI tool to manage all the Ollama models on your machine. Here are some cool features:

List and download remote models from the Ollama library

Batch cleanup of existing Ollama models

Fuzzy Search

Installation is super easy 🚀. You can get started with:

pip install ollama-manager

You can check out how to use it in the official GitHub repo, here.
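If you wonder what fuzzy search buys you, here is a tiny sketch using only Python's standard library. It is not the tool's own code: the `fuzzy_find` helper, the `cutoff` value and the list of model names are made up for the example. The point is that you can mistype a model name and still find it.

```python
import difflib
from typing import List


def fuzzy_find(query: str, model_names: List[str],
               limit: int = 5, cutoff: float = 0.4) -> List[str]:
    """Return the model names that look most similar to the query."""
    # Compare in lowercase, but give back the original spelling.
    by_lowercase = {name.lower(): name for name in model_names}
    hits = difflib.get_close_matches(
        query.lower(), list(by_lowercase), n=limit, cutoff=cutoff
    )
    return [by_lowercase[hit] for hit in hits]


# Hypothetical list of locally installed models.
installed = ["llama3.2:3b", "qwen2.5:7b", "gemma2:2b", "phi3:mini"]
print(fuzzy_find("qwen25", installed))
```

A higher `cutoff` makes the search stricter, a lower one more forgiving.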

HuggingFace TB released SmolTalk v1.0

Hugging Face TB Research is a team on the Hub dedicated to open-source projects for the community. The team explores synthetic datasets generated by Large Language Models (TB is for Textbook, inspired by the “Textbooks Are All You Need” paper).

SmolLM2 is a family of compact language models available in three sizes: 135M, 360M, and 1.7B parameters. The 1.7B variant demonstrates significant advances over its predecessor, particularly in instruction following, knowledge, reasoning, and mathematics. The context length is extended up to 8k (8192 tokens) and the overall accuracy is improved quite a lot! Don’t just take my word for it! You can read more here…

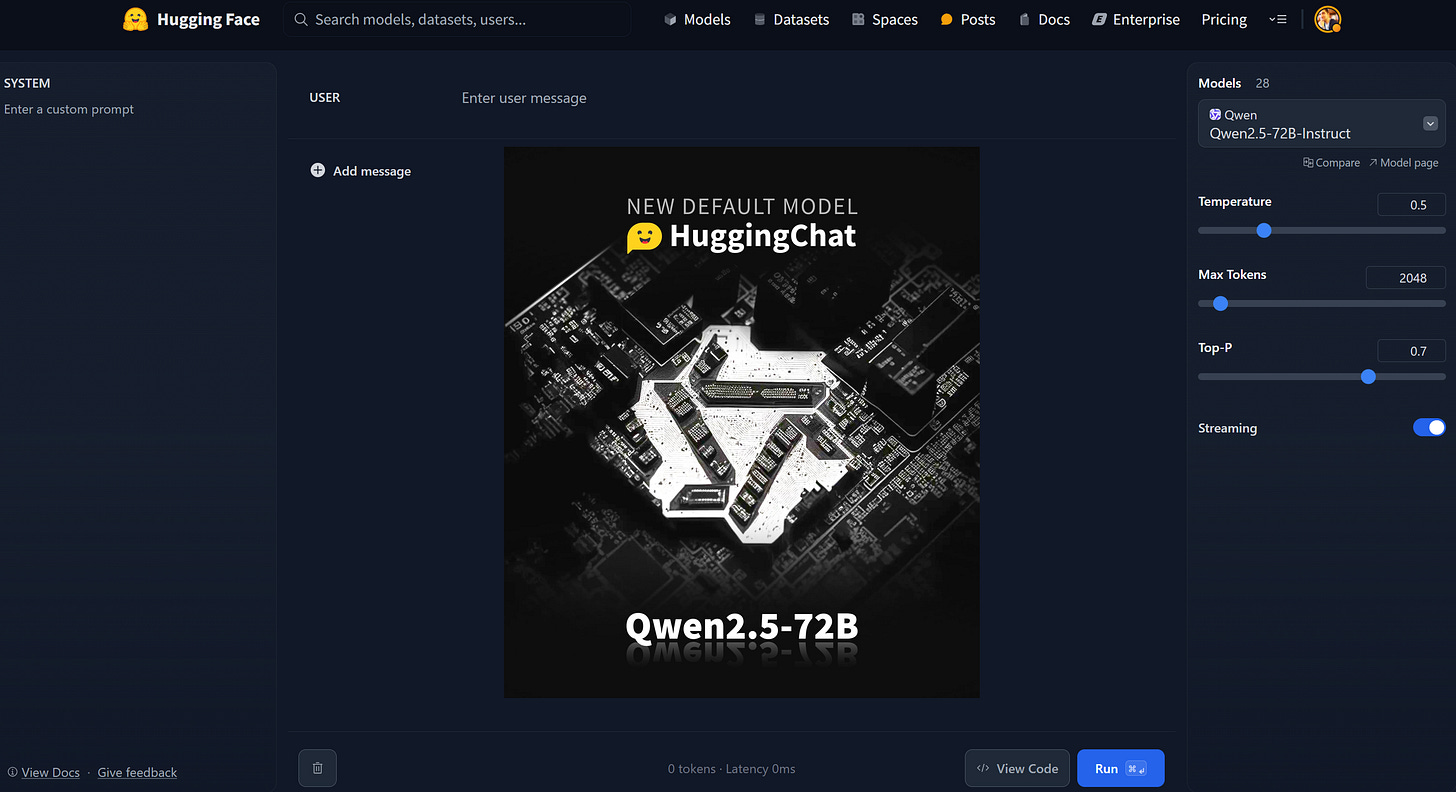

This week the Hugging Face TB Research team released the entire dataset used to train their models. It is a completely open-source instruction dataset with 1M samples!

pip install datasets

from datasets import load_dataset

ds = load_dataset("HuggingFaceTB/smoltalk", "all")

You can even browse this treasure directly on the Hugging Face Dataset page: https://huggingface.co/datasets/HuggingFaceTB/smoltalk…
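If you do not want to download everything just to have a look, here is a small sketch that streams a few samples and prints them as a chat. I am assuming each sample stores its turns in a `messages` list of `role`/`content` pairs; check the dataset page before relying on it. The helper names `render_conversation` and `peek_smoltalk` are mine, and the example conversation is invented.

```python
def render_conversation(sample: dict) -> str:
    """Turn one sample into readable text, one line per chat turn."""
    lines = []
    for message in sample["messages"]:  # assumed field name
        lines.append(f"[{message['role']}] {message['content']}")
    return "\n".join(lines)


def peek_smoltalk(n: int = 3) -> None:
    """Stream the first n samples from the Hub (needs network and `datasets`)."""
    from datasets import load_dataset  # imported here so the rest runs offline

    stream = load_dataset(
        "HuggingFaceTB/smoltalk", "all", split="train", streaming=True
    )
    for sample in stream.take(n):
        print(render_conversation(sample))
        print("-" * 40)


# Invented sample, only to show the expected shape.
example = {
    "messages": [
        {"role": "user", "content": "Rewrite this sentence to be more formal."},
        {"role": "assistant", "content": "Certainly. Here is a formal version."},
    ]
}
print(render_conversation(example))
```

Call `peek_smoltalk()` yourself when you are online; with `streaming=True` the samples are read on the fly instead of being downloaded all at once.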

The interesting thing is that this dataset is made of 4 parts:

Smol-Magpie-Ultra: 400K samples generated using Magpie

Smol-constraints: 36K samples that train models to follow specific constraints

Smol-rewrite: 50K samples focused on text rewriting tasks

Smol-summarize: 100K samples for email and news summarization

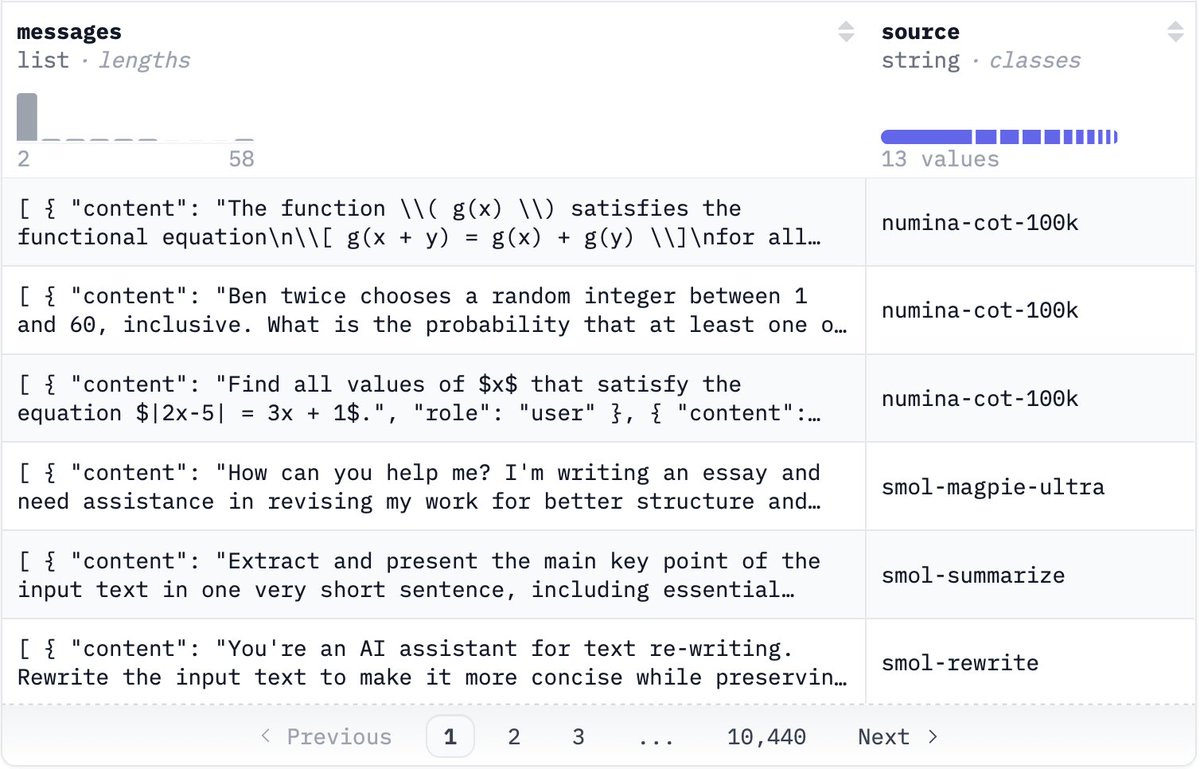

Qwen2.5-72B-Instruct for free on HuggingChat

Now you can have a powerful, free and fully open-source LLM directly on Hugging Face.

You simply need to go to HuggingChat, select the Qwen model in the upper-right drop-down, and BOOM: your totally free ChatGPT-style chatbot is up and running.

This is a real step forward. In all official benchmarks the newest Alibaba Cloud models are showing amazing performance, and these models have a huge context length (128k tokens). What are you waiting for?

Large Language Models explained briefly

If you are a curious learner, it is always good not to take anything for granted. One of the coolest and easiest to understand creators on YouTube about AI is Grant Sanderson, also known to the world as 3Blue1Brown.

I warmly suggest you subscribe to his channel. His videos are no fuss, no clickbait, just pure, highly curated explanations of AI and math.

In his latest video you can find the best introduction ever made to what a Large Language Model is and how it works!

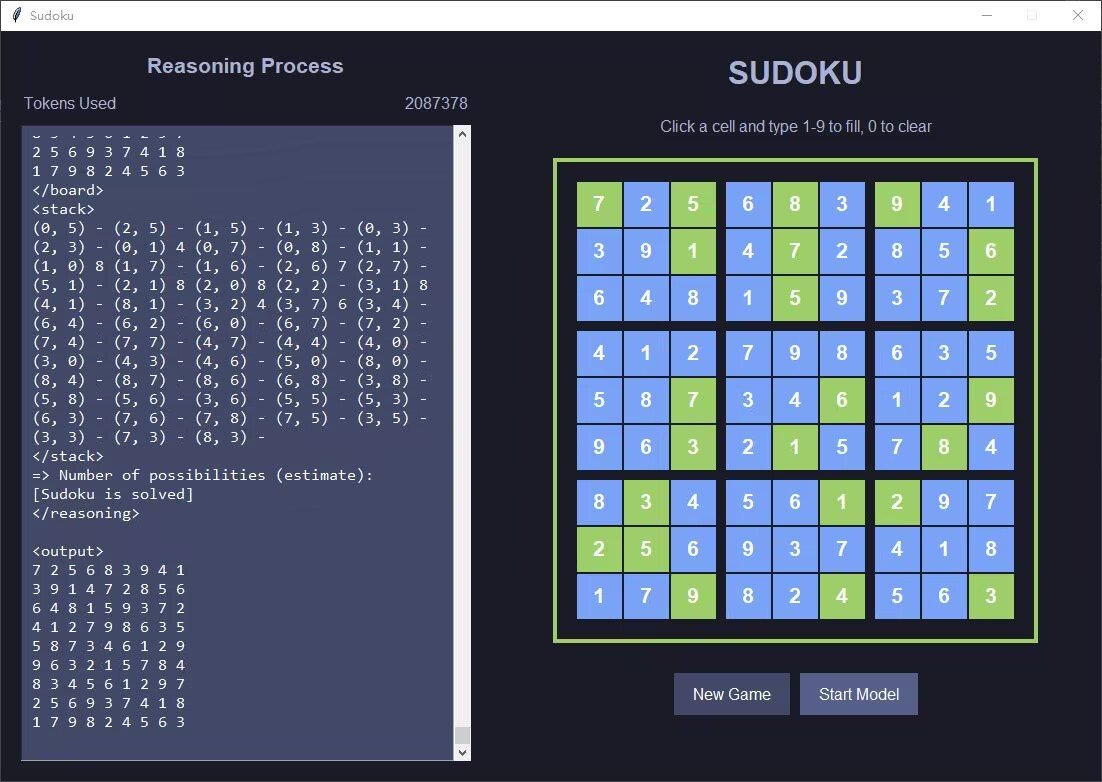

A Sudoku solver with a 12M-parameter LLM!

It may seem strange to you. Why the hell are you talking about a topic like this?

Well, to be honest, this kind of problem is typically solved with Machine Learning pipelines, not Large Language Models. But here we have a Super Tiny Language model, with RWKV architecture, that is able to solve highly complex Sudoku games with only 12 Million parameters.

What matters is the method Jellyfish042 used to achieve such a feat: an RNN architecture based on RWKV-6 (not a machine learning pipeline) and a Chain of Thought prompt to solve the puzzles.

The amazing thing about RWKV models is that they combine the strengths of both Transformer and RNN architectures. Regardless of the context length you always have:

constant speed

constant VRAM
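Why constant? A toy comparison helps. This is only a sketch of the general idea, not RWKV-6's actual update rule: a recurrent model folds every new token into a state of fixed size, while a standard Transformer keeps a key/value entry for every token it has seen. The sizes and the update formula below are made up.

```python
from typing import List


def recurrent_state_size(num_tokens: int, state_size: int = 4) -> int:
    """Process tokens with a fixed-size state; return how many numbers are stored."""
    state: List[float] = [0.0] * state_size
    for token in range(num_tokens):
        # Made-up update: the old state decays and the new token is mixed in.
        state = [0.9 * value + float(token % 3) for value in state]
    return len(state)


def kv_cache_size(num_tokens: int, head_dim: int = 4) -> int:
    """Keep one key and one value vector per token; return how many numbers are stored."""
    cache = []
    for _ in range(num_tokens):
        key, value = [0.0] * head_dim, [0.0] * head_dim
        cache.append((key, value))
    return sum(len(key) + len(value) for key, value in cache)


for length in (10, 1_000):
    print(length, recurrent_state_size(length), kv_cache_size(length))
```

The recurrent state stays the same size however long the input gets, so the cost of each new token stays flat, while the cache of the Transformer-style version grows with every token.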

You can find the model and more about it in the GitHub repository here.

As promised, here is the gift of the week: a full tutorial to install and run LLMs on your mobile phone (iOS or Android) using PocketPal AI.

This is only the start!

Hope you will find all of this useful. Feel free to contact me on Medium.

I am using Substack only for the newsletter. Here, every week, I give away free links to my paid articles on Medium.

Follow me and read my latest articles: https://medium.com/@fabio.matricardi

Check out my Substack page if you missed some posts. And since it is free, feel free to share it!